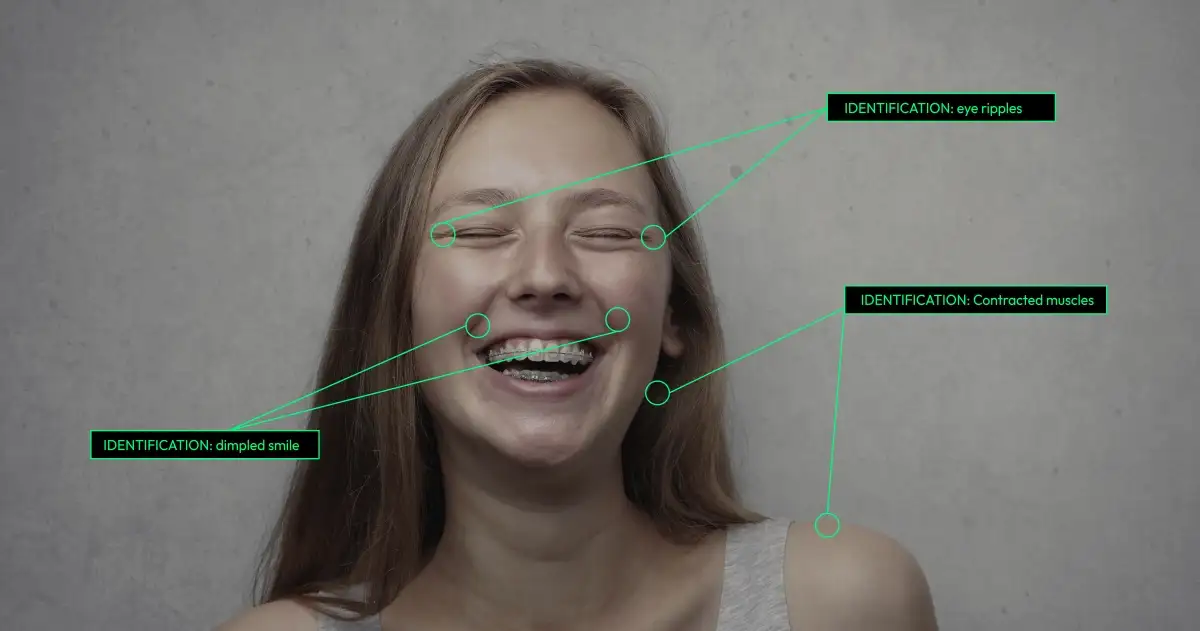

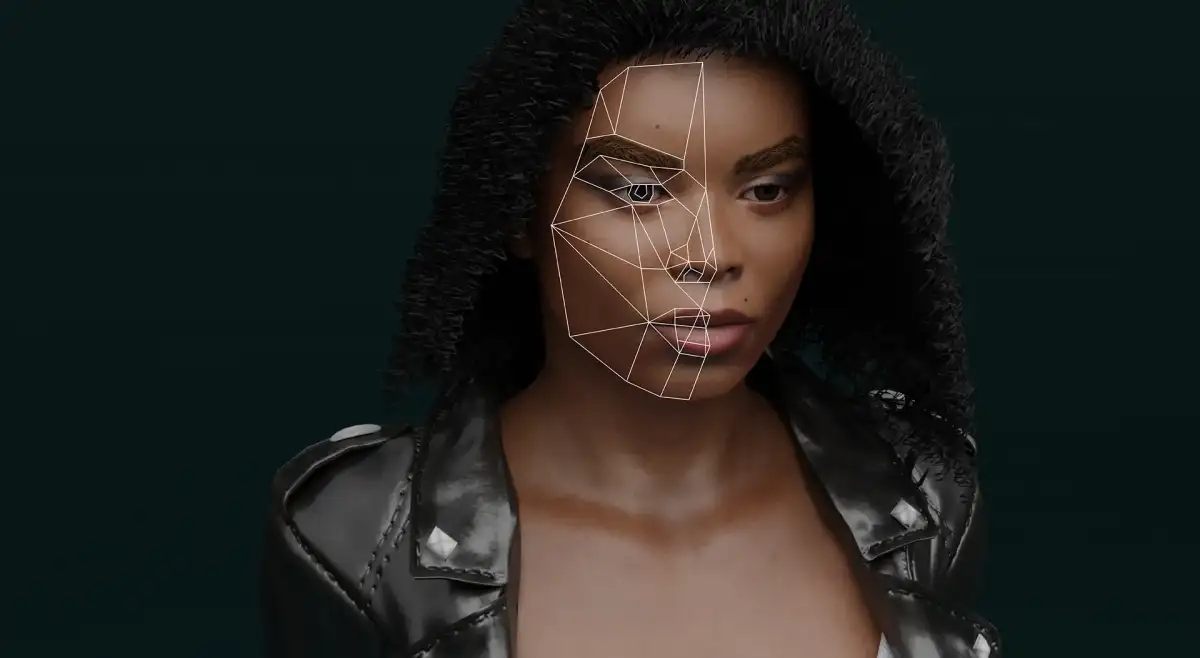

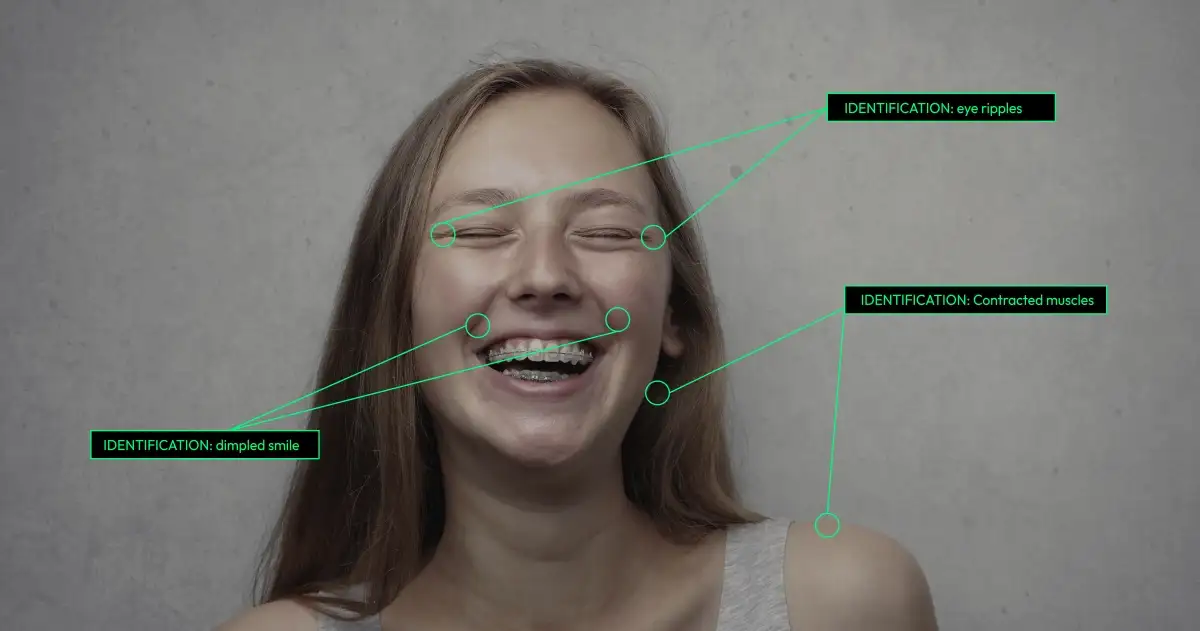

The intersection of collaborative robotics and facial emotion classification presents a promising synergy, where these two fields can mutually enrich one another.

Collaborative robotics endeavors to design robots capable of safe and efficient interactions with humans in shared spaces. In parallel, facial emotion classification focuses on deciphering human facial expressions to identify underlying emotions.
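In practice, a classifier usually produces one score per emotion class, and the predicted emotion is the class with the highest softmax probability. The minimal sketch below shows that decoding step in plain Python. The seven labels follow the FER-2013 convention, which is an assumption: the article does not say which classes the MuseBox model uses.

```python
import math
from typing import Sequence

# Assumed label set (FER-2013 order); the model used in this demo may differ.
EMOTIONS = ("angry", "disgust", "fear", "happy", "sad", "surprise", "neutral")


def softmax(scores: Sequence[float]) -> list[float]:
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode_emotion(scores: Sequence[float], labels: Sequence[str] = EMOTIONS) -> tuple[str, float]:
    """Return the most likely emotion label and its probability."""
    if len(scores) != len(labels):
        raise ValueError("expected one score per emotion label")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


label, p = decode_emotion([0.1, -1.0, 0.3, 2.5, 0.0, 0.7, 1.2])
print(label, round(p, 3))
```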

Combining the two makes it possible to build robots that not only work effectively alongside humans but also respond to their emotional state, making human-robot interaction more natural and empathetic. The goal is to improve both task performance and the experience of the people working with the robot.

In this article, we will showcase a demonstration of a facial emotion classification system operating on the Kria KR260 Robotics Starter Kit. This platform, designed for scalable and ready-to-use robotics development, serves as the backbone for our project.

We will delve into the key features and advantages of the Kria KR260, outlining the hardware and software components integral to our system. Additionally, we will share the outcomes of our experiments, illustrating how the Kria KR260, coupled with tools like PYNQ and MuseBox, facilitates the creation and deployment of adaptive and intelligent robotic applications.

Significance of Collaborative Robotics:

Collaborative robotics is a fast-growing field in key sectors such as industry, healthcare, and consumer markets. As the market expands, its evolving demands call for robust hardware platforms. MakarenaLabs, an AMD partner, specializes in building robotic applications for the Kria KR260 platform.

MakarenaLabs also offers MuseBox, a framework with extensive APIs and a machine learning stack that shortens time-to-market for AI applications. Collaborative robotics in particular benefits from advances in segmentation and scene understanding, which are pivotal for decision-making and for seamless human-robot collaboration.

SETUP

The facial emotion classification system comprises two core elements:

1. MuseBox platform: runs a range of AI tasks on frames captured from a USB camera.

2. PYNQ platform: handles communication with the FPGA on the Kria SOM of the KR260.
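The split between the two components can be pictured as a per-frame loop: capture a frame, run inference, and pass the result on. In this hardware-agnostic sketch, `read_frame` and `classify` are hypothetical stand-ins for the USB camera capture and the MuseBox inference call, whose real APIs are not described in this article.

```python
from typing import Any, Callable, Iterator, Optional

Frame = Any  # on the real system this would be an image array (assumption)


def run_pipeline(
    read_frame: Callable[[], Optional[Frame]],
    classify: Callable[[Frame], str],
    max_frames: int,
) -> Iterator[str]:
    """Yield one emotion label per captured frame.

    read_frame: hypothetical stand-in for USB camera capture; returns None
                when no frame is available.
    classify:   hypothetical stand-in for the MuseBox inference call.
    """
    for _ in range(max_frames):
        frame = read_frame()
        if frame is None:  # camera closed or no frame available: stop the loop
            break
        yield classify(frame)


# Usage with fake components so the loop runs without any hardware.
frames = iter(["f0", "f1", "f2"])


def fake_read() -> Optional[Frame]:
    return next(frames, None)


def fake_classify(frame: Frame) -> str:
    return "neutral" if frame == "f1" else "happy"


print(list(run_pipeline(fake_read, fake_classify, max_frames=10)))  # ['happy', 'neutral', 'happy']
```

Keeping capture and inference as injected callables lets the loop be tested on a desktop with fake components before swapping in the real camera and accelerator calls on the board.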

To implement this system, the following components are required:

- KR260 FPGA board by AMD
- PYNQ image based on PetaLinux for the KR260 (available in the provided link)
- MuseBox library (pre-installed in the provided image)

Facial Emotion Classification Demonstration

Once the setup is complete, open the Jupyter Notebook environment by navigating to <board IP address>:9090 in your web browser. A ready-to-use notebook is provided: click its Start and Stop buttons to start and stop the facial emotion classification.
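The notebook's Start and Stop buttons presumably control a background inference loop. One common way to structure that in Python is a worker thread gated by a `threading.Event`. The sketch below is an assumption about the design, not the notebook's actual code; `EmotionLoop` and its `step` callback are hypothetical names.

```python
import threading
import time
from typing import Callable, Optional


class EmotionLoop:
    """Runs `step` repeatedly in a background thread until stopped (hypothetical)."""

    def __init__(self, step: Callable[[], None], period_s: float = 0.03) -> None:
        self._step = step
        self._period_s = period_s
        self._stop = threading.Event()
        self._thread: Optional[threading.Thread] = None

    def start(self) -> None:
        if self._thread is not None and self._thread.is_alive():
            return  # already running: ignore repeated Start clicks
        self._stop.clear()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def stop(self) -> None:
        self._stop.set()
        if self._thread is not None:
            self._thread.join()  # wait for the current step to finish
            self._thread = None

    def _run(self) -> None:
        while not self._stop.is_set():
            self._step()  # e.g. capture one frame and classify it
            self._stop.wait(self._period_s)  # sleep, but wake early on stop()


# Usage: record how many times a dummy step ran.
ticks: list[int] = []
loop = EmotionLoop(lambda: ticks.append(1), period_s=0.01)
loop.start()
time.sleep(0.1)
loop.stop()
print(len(ticks) > 0)
```

In Jupyter, the two methods could be bound to widgets, for example `start_button.on_click(lambda _b: loop.start())`, where `start_button` is a hypothetical `ipywidgets.Button`; `on_click` passes the clicked button to the callback, which is why the lambda takes one argument. Waiting on the event instead of calling `time.sleep` lets `stop()` return promptly.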

Conclusion:

Facial emotion classification is significant because it supports emotional awareness and regulation, which in turn contribute to well-being and mental health. By analyzing human facial expressions and recognizing the underlying emotions, robots can adapt their behavior and responses to the people around them. This adaptation can cover speed, force, distance, difficulty, challenge, reward, or intervention, making human-robot interaction more nuanced and empathetic.
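As a minimal sketch of how such adaptation could be wired up, the detected emotion and its confidence can be looked up in a policy table of motion limits. The `MotionLimits` type, the emotion names, and every number below are hypothetical placeholders, not values from this demo; real limits for speed, force, and separation distance must come from the robot's own safety assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionLimits:
    speed_scale: float     # fraction of nominal speed (0..1)
    min_distance_m: float  # minimum human-robot separation in metres


# Illustrative values only; not taken from the demo.
NOMINAL = MotionLimits(speed_scale=1.0, min_distance_m=0.5)
POLICY = {
    "happy": NOMINAL,
    "neutral": NOMINAL,
    "surprise": MotionLimits(0.6, 0.8),
    "fear": MotionLimits(0.3, 1.2),
    "angry": MotionLimits(0.5, 1.0),
    "sad": MotionLimits(0.8, 0.6),
}


def adapt(emotion: str, confidence: float, threshold: float = 0.6) -> MotionLimits:
    """Return motion limits for the detected emotion.

    Predictions below `threshold` keep the nominal limits, and emotions
    missing from the table also fall back to nominal limits.
    """
    if confidence < threshold:
        return NOMINAL
    return POLICY.get(emotion, NOMINAL)


print(adapt("fear", 0.9))
print(adapt("fear", 0.4))
```

Falling back to nominal limits on low-confidence predictions is one possible choice that keeps a single noisy frame from changing the robot's behavior; a more conservative design could instead fall back to the slowest profile.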

Discover more at www.mklabs.ai.